My two previous blog posts clarified the meaning of the common, if slightly overused, phrase “culture of assessment” and presented assessment as a collective and collaborative process. Of course, no assessment conversation is complete without talking about data. Certainly, data are central to the process of program assessment, but data alone result in one-dimensional assessment. Robust assessment includes two other important dimensions: discussions and decisions. Using this 3Ds model – data, discussions, and decisions – enhances assessment and increases faculty engagement in the process.

Related Reading: The Ins and Outs of Higher Education’s Culture of Assessment

Data, Discussions & Decisions

Let’s start with the obvious – data. Whether a program is drowning in data or struggling to find data, it is important to realize not all data are equal. Effective assessment efforts focus on the most relevant data. By limiting the amount of data to that which is most critical, programs can routinely collect, organize, distribute, and analyze data without overwhelming faculty with these tasks.

But assessment does not live on data alone. Discussions contextualize data, and context matters. As they discuss static data, faculty can recognize program strengths and weaknesses. More importantly, faculty should be encouraged to identify departmental, campus, and/or external dynamics which impact data. Discussions about what may hinder or contribute to student learning make data dynamic and more meaningful to faculty.

Discussions should lead to decisions. Here we need to be wary of another assessment cliché – data-driven decisions. Data-driven decision models discount the very contextual variables faculty should be encouraged to discuss. Data are always situated within programs, which must respond to internal and external influences as well as to data. When faculty consider both program data and context, data guide rather than dictate decision making. Decisions become data-informed rather than data-driven.

Related Reading: Assessment is a Team Sport: A Collaborative Faculty Process

Making it Happen

Engaging faculty in data-informed decision making takes deliberate effort. Not only does the process need intentional facilitation, but faculty involvement must also be documented. If you think (or hope) this is just another example of utopian expectations in higher education, you may want to think again. Even a cursory review of the Higher Learning Commission’s accreditation criteria tells us institutions must provide evidence of substantial faculty involvement in this process.

Administrators need not lock faculty in a room full of data charts or spreadsheets to make this happen. A less dramatic approach can draw faculty into assessment and decision-making processes. Initiating 3D assessment can be as straightforward as this three-step practice.

Step 1. Routinely and systematically collect the most relevant data throughout the course of a semester or term. Distribute the data to those who have the most vested interest in it. Keep in mind, not all faculty members need to review all data. Stick with what is relevant.

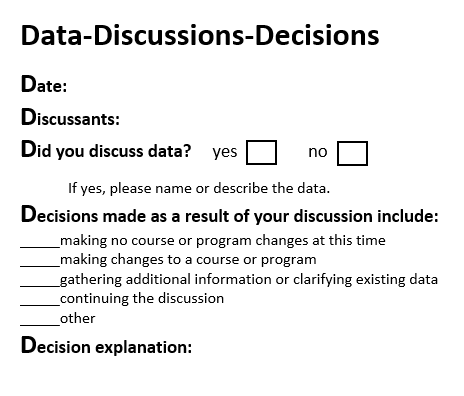

Step 2. Create a simple form, similar to the one in Figure 1, to record the date, topic, and participants in the discussion. Pose open-ended questions which prompt faculty to consider the many factors influencing the data. For example, ask faculty to identify possible barriers to student learning or evaluate the extent to which an assignment, project, or examination is aligned (or not) with program outcomes.

Step 3. On the bottom of the form, document what decisions were made. A full spectrum of choices, ranging from decisions to change nothing to decisions to change an entire program, will validate the consideration of data in conjunction with the program’s context and professional judgement of faculty. Collect the forms as documentation of faculty involvement in assessment and decision making.

3D assessment is really that easy. Most faculty willingly engage when they see the connections between data, discussions, and decisions. These dimensions shift assessment from a bureaucratic distraction unworthy of faculty time to a professional dialogue in which faculty influence important decisions.

Author

Dr. Connie Schaffer is an Assistant Professor in the Teacher Education Department at the University of Nebraska Omaha (UNO). She serves as the College of Education Assessment Coordinator and is involved in campus-wide assessment efforts at UNO. Her research interests include urban education and field experiences of pre-service teachers. She co-authored Questioning Assumptions and Challenging Perceptions: Becoming an Effective Teacher in Urban Environments (with Meg White and Corine Meredith Brown, 2016).
